Embodied Voice is a research project conducted by Diana Serbanescu, Scott DeLahunta, Kate Ryan, Ilona Krawczyk, and myself, supported by the Weizenbaum Institute of Technology as a residency project from November 2021 to February 2022.

The results of this research project are published as an article in the book “Artificial Intelligence – Intelligent Art? Human-Machine Interaction and Creative Practice”.

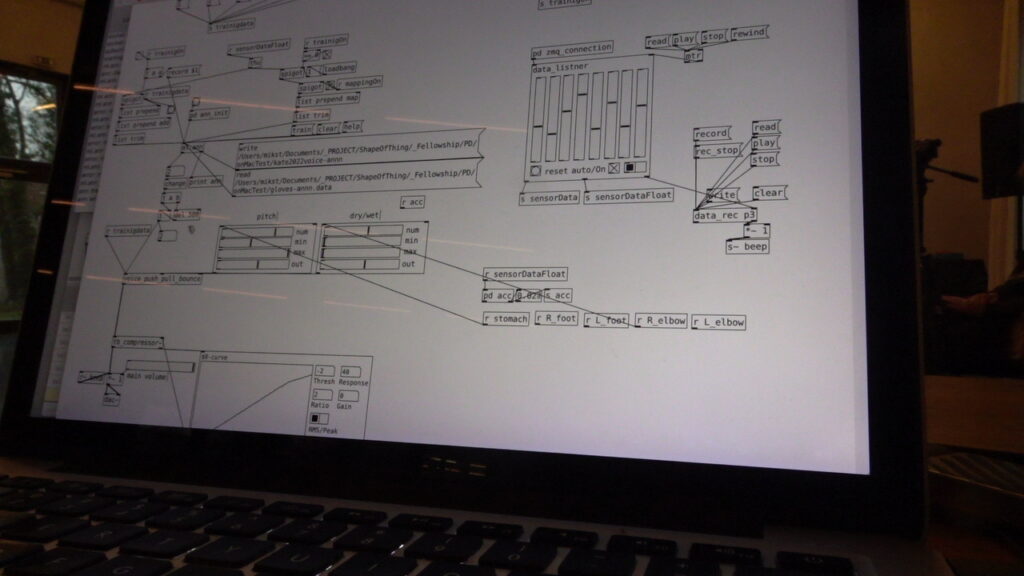

Continuing from the Shape of the Things to come Research and the Dancing at the Edge of the world performance, Diana, who is both an AI researcher and a choreographer, is looking into the use of machine learning to understand the embodiment of voice in the Grotowski theater method. During this three-month research residency, Kate further explored her voice practice of the Grotowski alphabets. I constructed a pair of leggings with embedded e-textile sensors that capture her lower-body movements and weight balance. She also wears two bend sensors on her upper body to add some upper-body movement information. The data are sent to a computer, where the ML.lib object in Pure Data (based on the Gesture Recognition Toolkit, similar to Wekinator) learns and recognizes certain poses she makes.

One of the exercises used in Grotowski theater is the alphabet of movements and voices. For example, one makes a pushing movement and also expresses the “push” as a vocal sound. The same goes for pull. This training tries to embody the movement within the vocal sound, and vice versa. A trained performer can move a partner whose eyes are closed with her voice alone, expressing that she is “pushing” or “pulling” without actually touching the person. This sounds like theatrical make-believe... but surprisingly, it works. It was fascinating to see two trained performers doing this. They can really feel the movement expressed in the voice.

Inspired by this training, we tried to create a machine-learning version of the performance partner, one that would understand your movement of “push” and “pull” and generate the embodied sound of “push” and “pull”.

The number of analog sensors I can use on one performer is limited to 8 by the Bela Mini I am using. Although ML.lib has a tool for evaluating temporal sequences, it did not work well for this purpose, so most of the time I used an artificial neural network, which looks at a static moment for recognition. With all these limitations (and I think that even with 100 sensors it would not be much different), the machine gets it wrong. But Kate, who patiently became the subject of these experiments, started to understand how to trigger the machine learning to produce the correct voice. Instead of the machine embodying the voice, she was embodying the machine.
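As a rough illustration of what recognizing a “static moment” means, here is a minimal Python sketch. It is not the Pure Data patch used in the project: it swaps the artificial neural network for a much simpler nearest-centroid classifier, and the sensor values, labels, and function names are all made up for the example. The only detail taken from the setup above is the frame size of 8 analog channels.

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)

NUM_SENSORS = 8  # number of analog channels per frame, as in the setup above


def train_centroids(examples):
    """Average the frames recorded for each label into one centroid per label."""
    sums, counts = {}, {}
    for label, frame in examples:
        assert len(frame) == NUM_SENSORS
        acc = sums.setdefault(label, [0.0] * NUM_SENSORS)
        for i, value in enumerate(frame):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(centroids, frame):
    """Label a single frame by its closest centroid, ignoring all earlier frames."""
    return min(centroids, key=lambda label: dist(centroids[label], frame))


# Hypothetical training frames: normalized sensor readings (0..1), invented here.
examples = [
    ("push", [0.9, 0.8, 0.2, 0.1, 0.7, 0.6, 0.3, 0.2]),
    ("push", [0.8, 0.9, 0.1, 0.2, 0.6, 0.7, 0.2, 0.3]),
    ("pull", [0.1, 0.2, 0.9, 0.8, 0.3, 0.2, 0.7, 0.6]),
    ("pull", [0.2, 0.1, 0.8, 0.9, 0.2, 0.3, 0.6, 0.7]),
]
centroids = train_centroids(examples)

# One incoming frame is classified entirely on its own.
print(classify(centroids, [0.85, 0.8, 0.2, 0.15, 0.7, 0.6, 0.3, 0.2]))
```

Because each frame is judged in isolation, any moment that happens to resemble a learned pose receives that label, regardless of the movement that led to it. This is one way to picture how a performer can learn which body positions trigger which voice.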

Scott conducted an interesting ethnographic study of us, recording and annotating our try-out sessions and asking Kate to annotate what she was trying and thinking at each key moment. This is archived at the Motion Bank project.

In the end, we did not achieve an AI that embodies voice as we originally intended. But we learned a lot about how the intention of a movement is expressed through a combination of small movements and body balance. In fact, mastery of these embodied movements is gained through lengthy physical training (Grotowski theater is also known for its strenuous training), and we should simply respect the experience of each performer who has mastered it, rather than trying to extract its essence with technology.

On the last day of the experiment at the rehearsal studio, we explored artistically what we could do with the tools we had at hand. We moved the portable speaker from the performer to a second performer/spectator, so that the synthesized voice of one performer came out of the spectator moving through the space. This setting created a very interesting environment in which the voice separates from the body, or rather the body extends into the space through the synthesized voice. It was only a one-day exploration, but it showed a potential direction to explore artistically in the future.